Introduction to Graphics Controllers

By Jérôme 'JeGX' GUINOT - The oZone3D Team

jegx [at] ozone3d [dot] net

Initial draft: 20 Aug, 2005

Last update: February 23, 2006

Translated from French by Jacqueline ESHAGHPOUR - jean25 [at] freesurf [dot] ch

"When I have a problem on an nVidia, I assume that it is my fault. With anyone else's drivers, I assume it is their fault."

- John Carmack -

1 - Introduction

The graphics card is one of the fundamental elements of the graphics chain. The latest generations of graphics cards have become so complex (millions of transistors, a GPU, hundreds of megabytes of memory, programming languages, ...) that they resemble real miniaturised computers. In this sense, the 3D engine, another fundamental link in the graphics chain, acts as the graphics card’s operating system, since it lets the developer make the most of the card’s resources.

fig. 1 - nVidia GeForce 7800 GT PCI Express

The graphics card has become so important in today’s computers that the next version of the Windows operating system (Vista), expected in 2006, will require a card supporting DirectX 9 in order to run Aero Glass, the new Microsoft user interface.

As soon as one enters the field of realtime 3D, the graphics card comes first, whereas for most classic applications (word processing, spreadsheets, databases, internet, ...) it is secondary. Why?

The aim of realtime 3D is to render 3D scenes as believably as possible. Today the result can be mistaken for the realism produced by ray-tracing software, as in the following image:

fig. 2 - Water Reflexion – Demoniak3D Demo-System

To attain this level of realism, the algorithms used are ever more complex and demand more and more computing power. Not long ago, this computing power was supplied entirely by the computer’s main processor (the CPU). That lasted until nVidia, already known for its RIVA chipsets, introduced the first graphics chip capable of taking over part of the calculations: the renowned GeForce 256. nVidia took the opportunity to coin a new three-letter acronym: GPU (Graphics Processing Unit). Shortly afterwards ATI, nVidia’s most serious competitor, put forward its own terminology: VPU (Visual Processing Unit). However, as is often the case, it is the first acronym that stuck.

Without going into too much detail, the novelty introduced by the GeForce 256 is the T&L, or Transform and Lighting, module. The T&L module is a small calculation unit whose sole role is to take over the calculations needed to transform the vertices (the corner points of 3D objects) and to light them. These are vector calculations (the quantities involved are vectors) and matrix calculations (matrix concatenation, matrix-vector multiplication). All these calculations are rather heavy (open a maths book at the vector calculus chapter to be convinced). They are repeated for each vertex, which means that as soon as a 3D object is dense (composed of a large number of vertices) the CPU on its own can no longer keep up and maintain fluidity on the screen. A 3D scene is considered fluid when the number of frames per second (FPS) exceeds 30. The idea, therefore, was to move these calculations onto the graphics card, and nVidia made headlines by doing so with the GeForce 256.
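To give a feel for the work a T&L unit repeats for every vertex, here is a minimal Python sketch of matrix concatenation, matrix-vector multiplication and a simple diffuse lighting term. The function names, the column-vector convention and the lighting model are assumptions chosen for illustration; they do not describe how any particular GPU implements its T&L module.

```python
import math

def concat(a, b):
    """Concatenate two 4x4 matrices: the result applies b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform(m, vertex):
    """Multiply a 4x4 matrix by the vertex (x, y, z), extended to (x, y, z, 1)."""
    v = (*vertex, 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))

def rotation_z(degrees):
    """Rotation matrix around the Z axis."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

def translation(tx, ty, tz):
    """Translation matrix."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def diffuse(normal, to_light):
    """Per-vertex diffuse intensity: clamped dot product of two unit vectors."""
    return max(0.0, sum(n * l for n, l in zip(normal, to_light)))

# Rotate 90 degrees around Z, then translate. The matrices are concatenated
# once per object, and the result is applied to every vertex.
model = concat(translation(1, 2, 3), rotation_z(90))
print(transform(model, (1.0, 0.0, 0.0)))  # approximately (1.0, 3.0, 3.0)
```

Each vertex costs a dozen or so multiplications and additions for the transform alone, which is why a dense object quickly overwhelms a CPU that also has everything else to do.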

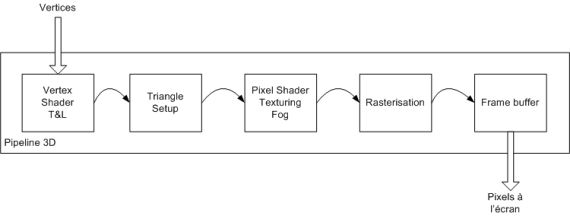

fig. 3 - Simplified diagram of the 3D pipeline

This principle remained in force until the introduction of programmable shaders with the GeForce 3, 4 and more especially FX. Before programmable shaders, the functions of the T&L and Texturing modules were implemented in hardware, etched into the silicon chip for eternity. It was soon noticed that all 3D renderings resembled each other: even when the 3D objects or the textures were different, the overall atmosphere of the scene was similar. And in our quest for realism through ever more impressive special effects, this was intolerable!

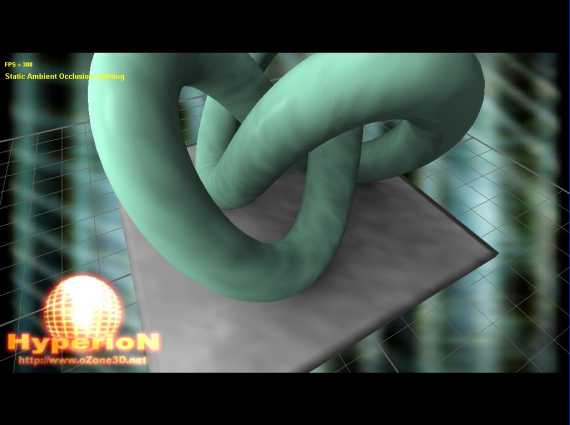

This problem had to be solved, and the answer was not long in coming: simply allow the developers of 3D solutions (mainly 3D engines) to reprogramme the fixed parts of the 3D pipeline. Of course, reprogramming the T&L and Texturing modules is not an easy task, but it is definitely worthwhile, as shown in figure 2 (water reflection) or in figure 4:

fig. 4 - Realistic rendering using the vertex and pixel shaders.

To reprogramme the functions implemented in the card’s hardware, two strange characters appeared on the turbulent realtime 3D scene: the vertex shader (or vertex processor) and the pixel shader (or pixel processor). The vertex shader’s role is to bypass the T&L module and the pixel shader’s role is to bypass the Texturing module:

fig. 5 - Vertex and Pixel Shaders.

These vertex and pixel shaders, also called programmable shaders, were initially programmed in the graphics processor’s own language, that is to say assembler. As usual, GPU assembler gave almost complete control over the instructions (necessary to reduce their number and thus increase performance) but was relatively difficult to write, to read (just try to understand how a reflection/refraction algorithm works when it is coded with DP3, ADD, RSQ, MOV and MAD) and above all to maintain. A programme is a living thing and must be allowed to evolve.

Thus, to raise the level of the language, and with it the level at which intent is expressed, high-level languages known as HLSL (High Level Shading Language) were created. Support for these languages is very recent (about a year old) and they have become the new El Dorado of 3D programming gurus.

For example, let us compare the assembler code and the HLSL code for normalizing a vector H. If you do not know what normalizing a vector means, it does not matter. What matters is to see how the languages have evolved and how they differ.

Assembler:

DP3 H.w, H, H;

RSQ H.w, H.w;

MUL H, H, H.w;

HLSL:

normalize(H);

I think it is sufficiently clear.
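For readers who do want to know what normalizing means, the three assembler instructions can be mirrored step by step in Python. This is only an illustrative sketch: the function name and the tuple representation of the vector are assumptions, and a zero-length vector (which would cause a division by zero) is not handled.

```python
import math

def normalize(h):
    """Scale the 3D vector h to unit length, mirroring the three assembler steps."""
    squared_length = sum(c * c for c in h)          # DP3: dot product of H with itself
    inv_length = 1.0 / math.sqrt(squared_length)    # RSQ: reciprocal square root
    return tuple(c * inv_length for c in h)         # MUL: scale every component

print(normalize((3.0, 0.0, 4.0)))  # a vector of length 5 becomes roughly (0.6, 0.0, 0.8)
```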

Now that you have seen the hardware side of graphics cards (even though we have not mentioned AGP or PCI-Express, which are strictly hardware matters), we will talk about the standards that govern the world of 3D graphics cards. Two names come to mind immediately: OpenGL and Direct3D.

Current graphics cards, whether equipped with chipsets from nVidia (GeForce series) or from ATI (Radeon series), support both standards. What are these standards for, and why are there two?

From the point of view of the 3D solutions developer, the graphics card is a small computer that one must be able to programme in order to tame its millions of transistors and display 3D scenes at lightning speed, like those seen in the latest games, for instance Doom 3 (figure 6), Need For Speed Underground 2 (figure 7) or Serious Sam 2 (figure 8 – really excellent for letting off steam, that one!).

fig. 6 - Doom 3 – Copyright 2004 id Software.

fig. 7 - Need For Speed Underground 2 – Copyright 2004 Electronic Arts Inc.

fig. 8 - Serious Sam 2 – Copyright 2005 Croteam

The graphics card is hardware under the control of the computer’s operating system. One therefore cannot access it to programme it directly. Modern operating systems such as Windows or Linux manage all peripherals through dedicated software layers called drivers.

fig. 9 - Link between an application and the graphics card

Therefore, in order to access the card and to drive it (really!), you need good drivers. By default, Windows installs so-called generic drivers, which are valid for all graphics cards. Graphics cards, no matter where they come from, share the same basic functions (among others, displaying a pixel on the screen), so a generic driver is capable of running them (luckily, otherwise we would only have a miserable black screen...).

But to use the specific features of each board, one must know them. As the operating system cannot know every card from every manufacturer, it is up to the driver supplied with the graphics card to make the graphics chip’s own functions available in a standardised manner.

Once the graphics drivers are installed, the 3D applications programmer can finally take control of the GPU and make the pixels dance on the screen. To do so, he can use either of two standards, each offering a standardised programming interface (that is to say, the programming is the same no matter which graphics card is used): OpenGL and Direct3D. These two standards are implemented in the graphics drivers by the maker of the graphics chip (nVidia or ATI).

OpenGL is considered by 3D programming purists to be the realtime 3D standard. OpenGL has its origin in IRIS GL, the first API (Application Programming Interface, another name for standard) developed by Silicon Graphics (SGI). This API became so popular with SGI developers that, at the beginning of the nineties, SGI decided to make it an open standard. OpenGL (Open Graphics Library) was born.

fig. 10 - The official site: http://www.opengl.org

OpenGL is the 3D standard used on most operating systems (Windows, Unix, Linux, ...) and very often the only one available (MacOS, Linux, Symbian, ...). The most recent version of OpenGL is 2.0, which supports all the latest 3D technological innovations.

In the mid-nineties, Microsoft was obliged to react in order to attract as many video game developers as possible to its flagship product, namely Windows. The problem was that, at the time, graphics programming under Windows was far from workable. OpenGL existed but was mostly deployed in the industrial sector (workstations under Unix) and was very badly supported, if at all, by consumer hardware. Video game developers under Windows therefore had very few options. Microsoft took note of this and proposed DirectX, a multimedia programming library for Windows. DirectX lets one programme the sound card, the keyboard, the mouse, the network, and above all the 3D card. The part dedicated to the 3D card is called Direct3D. DirectX, and therefore Direct3D, is only available under Windows. Let us say, for argument’s sake, that this is the essential difference between the two standards.

fig. 11 - The official site: http://www.microsoft.com/windows/directx/default.aspx

In fact, OpenGL and Direct3D have evolved in a similar fashion and, like good wine, they have improved over the years. The two standards offer practically the same functions for driving the graphics card and are fully supported by all the 3D graphics cards on the market (particularly those equipped with nVidia or ATI chipsets).

Video games use one or the other of these standards. It is therefore essential to update the graphics card’s driver in order to run OpenGL or Direct3D and to benefit fully from the latest games. At the time of writing, the two manufacturers offer the following driver versions:

Forceware 78.01 for nVidia – available at www.nvidia.com

Catalyst 5.9 for ATI - available at www.ati.com

But let us not forget that drivers only serve to make the functions of the graphics card accessible in a standardised manner. This means that if the card lacks a function (for example, support for vertex and pixel shaders), the driver cannot change anything. Therefore, depending on the application one would like to use (3D modelling software, video games, Demoniak3D, ...), one must choose one’s 3D card carefully among the abundance available on the market, and then update the graphics driver with the latest version available on the manufacturer’s site.

Using the latest version is important for 2 reasons:

- the addition of new functions

- the correction of bugs

To convince you, here is an example of a bug in a new function which affected the ATI Catalyst drivers for a long time. It concerns the support of the new (old now!) function called point_sprite. This function is very useful for particle systems because it accelerates their rendering.

fig. 12 - ATI Radeon 9800 Pro board equipped with the latest Catalyst drivers (>= 5.2)

fig. 13 - ATI Radeon 9800 Pro board equipped with the Catalyst 4.1 drivers

As you can see in figure 13, the texture rendering is completely distorted over a large portion of the screen. This bug was corrected in Catalyst 5.2.

This example shows how important it is to update the graphics card’s drivers as soon as new versions are available.

Another point to address is the framerate, or more simply FPS (Frames Per Second). This indicator is the reference value for finding out whether a graphics card is correctly set up, whether the drivers are properly installed, and whether the card is powerful enough to run a 3D application (although the latter also depends a lot on the 3D application and the 3D driver: if the application is badly coded and not optimised, even a high-end 3D card will struggle).

The FPS is the number of frames rendered per second. A frame is the usual name for one rendering of the screen. When one speaks of 60 FPS, this means that the graphics system is capable of rendering the 3D scene 60 times per second.

3D benchmarks (the well-known programs used to measure the performance of 3D cards) rely heavily on the FPS for their final result. Take, for example, 3DMark2005, published by Futuremark (www.futuremark.com). If you look more closely, you will notice that the final result is given by the following relationship:

3DMark score = pow((FPS1 * FPS2 * FPS3), 0.33) * 250

where pow() is the mathematical power operator (pow(x, n) reads x to the power n), and FPS1, FPS2 and FPS3 are respectively the FPS of parts 1, 2 and 3 of the benchmark.
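The relationship is easy to try out. The short Python sketch below simply transcribes the formula quoted above; the function name is hypothetical and the FPS values are made up for the example, not measured results. Since an exponent of 0.33 is close to a cube root, the score behaves roughly like the geometric mean of the three framerates multiplied by 250.

```python
def score_3dmark(fps1, fps2, fps3):
    """Score as quoted in the text: pow(FPS1 * FPS2 * FPS3, 0.33) * 250."""
    return pow(fps1 * fps2 * fps3, 0.33) * 250

# Illustrative framerates only (not real benchmark results).
print(round(score_3dmark(20.0, 15.0, 25.0)))
```

One consequence of the product inside pow() is that a card cannot compensate for one very slow test by being very fast in the other two: all three parts weigh equally.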

The only drawback of a benchmark like this is how cumbersome it is to use (downloading, installation and running time).

The problem with classic benchmarks is that they give a single overall result for the graphics system’s performance. But each graphics card is different and therefore performs differently at each stage of the 3D pipeline. Some cards perform very well at the T&L stage, others at the Texturing stage, and yet others handle memory transfers well (particularly interesting if the application uses a lot of dynamic textures).

It is for this reason that the Suffocate series of benchmarks was created. They allow a quick estimate of the graphics chain’s performance. The Suffocate benchmarks were developed with the help of the Demoniak3D demo-system. Each benchmark is delivered with its source code, so it can quickly be modified to suit one’s own needs. The Suffocate benchmarks give an extremely simple result: the number of frames rendered during the demo (in general, each demo lasts 60 seconds) and the average number of FPS. Each benchmark measures a particular characteristic:

- Suffocate SB01: this bench uses the shadow volumes and the particle system and is fairly general.

The number of FPS is closely linked with the idea of fluidity. How does one know if a realtime 3D rendering is fluid? Simply by looking at the number of FPS whenever this is possible. Perfect fluidity is reached as soon as one exceeds 50-60 FPS. At that level, the 3D application’s reaction time to user input is imperceptible and the notion of realtime takes on its full meaning. An FPS of 20, for example, still lets us enjoy a video game, but with a feeling of sluggishness. Below 10 FPS, the 3D application is no longer realtime and tends towards a slide-show!
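As a small illustration, the average FPS reported by a timed benchmark run and the fluidity levels described above can be sketched in Python. The function names are hypothetical, and the exact boundaries between categories (10, 30 and 50 FPS) are assumptions derived from the figures quoted in this article rather than a formal definition.

```python
def average_fps(frames_rendered, duration_s=60.0):
    """Average framerate of a run that rendered frames_rendered frames in duration_s seconds."""
    return frames_rendered / duration_s

def fluidity(fps):
    """Rough label for a framerate, using thresholds taken from the text."""
    if fps < 10:
        return "slide-show"
    if fps < 30:
        return "playable but sluggish"
    if fps < 50:
        return "fluid"
    return "perfectly fluid"

fps = average_fps(3600)      # 3600 frames rendered during a 60-second demo
print(fps, fluidity(fps))    # 60.0 perfectly fluid
```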

One last thing: as there are two standards for 3D, OpenGL and Direct3D, benchmarks must exist for each of them. Indeed, this is the case. But most benchmarks are developed for Direct3D, since the majority of PC video games use this API. 3DMark2005 is entirely Direct3D-oriented. The Suffocate series is OpenGL-oriented for the moment, but a Direct3D version can be expected, seeing that the oZone3D engine behind it supports both APIs. All that remains is to code the Direct3D plugin...

7 - Architecture and Functions

In order to choose one’s graphics card and to master it, it is important to know the basic architecture and functions of a 3D card.

As explained earlier, modern 3D cards support OpenGL as well as Direct3D, for a simple reason: the 3D pipeline is the same no matter which API is used. The technical characteristics of a 3D card are therefore valid for both APIs. Here we go, let us begin to examine our hardware.

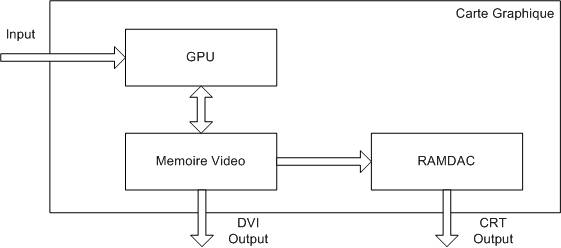

First of all, we will look at the simplified hardware layout of a graphics card. This layout is valid for all cards. One can distinguish three main components: the GPU, the video memory (video RAM) and the RAMDAC.

fig. 17 - Simplified anatomy of the graphics card

The RAMDAC

Let us begin with the last one, the RAMDAC. RAMDAC stands for Random Access Memory Digital-to-Analog Converter. The RAMDAC converts the digital data in video memory (the part commonly named the frame buffer) into the analogue signal sent to the screen. The performance of the RAMDAC, given in MHz, deserves careful study. The operating frequency of the RAMDAC is calculated from the final resolution and the vertical refresh rate with the following relationship:

RAMDAC Freq = width * height * Vfreq * 1.32

where width and height are respectively the width and height of the screen in pixels (1024x768, 1280x1024, ...), Vfreq is the refresh rate and 1.32 is a factor which accounts for the inertia of the cathode ray tube’s electron gun (the gun loses roughly 32% of its time in horizontal and vertical movements). Armed with this relationship, let us make a few small calculations.

For a resolution of 800x600 and a Vfreq of 75 Hz we obtain a RAMDAC frequency of:

RAMDAC Freq = 800 * 600 * 75 * 1.32

RAMDAC Freq = 47520000 Hz, that is 47.52 MHz.

With a resolution of 1280x1024 at 85 Hz the frequency becomes:

RAMDAC Freq = 1280 * 1024 * 85 * 1.32

RAMDAC Freq = 147062784 Hz, let us say 147 MHz.

On many modern cards, the RAMDAC frequency is roughly 400 MHz. A quick calculation tells us that, in order to fully exploit such a RAMDAC at 1600x1200 (the resolution of workstations in professional graphics), one would need a screen with a refresh rate of roughly 160 Hz. This type of screen is rather rare...
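These calculations can be reproduced with a few lines of Python. The sketch below applies the relationship given above in both directions; the function and constant names are assumptions introduced for the example.

```python
CRT_OVERHEAD = 1.32  # factor quoted in the text for the electron gun's retrace time

def ramdac_frequency_hz(width, height, vfreq_hz):
    """RAMDAC frequency needed for a given resolution and vertical refresh rate."""
    return width * height * vfreq_hz * CRT_OVERHEAD

def max_refresh_hz(ramdac_hz, width, height):
    """Highest refresh rate that a RAMDAC of the given frequency could drive."""
    return ramdac_hz / (width * height * CRT_OVERHEAD)

print(f"{ramdac_frequency_hz(800, 600, 75) / 1e6:.2f} MHz")    # 47.52 MHz
print(f"{ramdac_frequency_hz(1280, 1024, 85) / 1e6:.2f} MHz")  # 147.06 MHz
print(f"{max_refresh_hz(400e6, 1600, 1200):.1f} Hz")           # 157.8 Hz
```

The last line is the inverse calculation: a 400 MHz RAMDAC at 1600x1200 corresponds to a refresh rate just under 160 Hz.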

The RAMDAC frequency of current 3D cards is therefore amply sufficient. But the RAMDAC is only needed for CRT (cathode ray tube) screens. With digital screens (flat panels), the RAMDAC is of no use because no digital-to-analogue conversion is required. In this case the video output is fed directly from the graphics card’s frame buffer. This is why recent graphics cards normally have two outputs: the CRT output for classic cathode ray tube screens and the DVI (Digital Visual Interface) output for digital screens.

The video memory

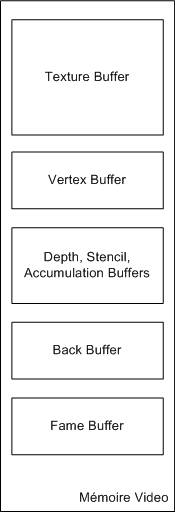

Now let us have a look at the video memory. Its main function is to store all the data that the graphics processor (the GPU) must deal with. It goes without saying that the quantity of memory is an important factor, even if today it is rare to find graphics cards with less than 128 MB of video RAM. But what data are we speaking of exactly?

For classic applications, the only data stored in video memory is the contents of the screen. This data is written to a particular zone called the frame buffer. Our computer’s screen lets us view the contents of the frame buffer directly (all the more so with digital screens). Let us be mad and suppose that we are doing office work at a resolution of 1600x1200 in true color, that is to say that each pixel shown on the screen is coded on 3 bytes (1 byte for each of the Red, Green and Blue components). The quantity of memory needed for the frame buffer is:

FrameBufferSize = 1600 * 1200 * 3

FrameBufferSize = 5760000 bytes, that is about 5.76 MB.

Is it really useful to have a graphics card with 128 MB of memory to do office work? The answer is no.

But luckily realtime 3D is there to use all this enormous memory...

With 3D applications, things become complicated, as always in the 3D world. A 3D application (a video game, or more simply Demoniak3D) uses the memory fully and intensively. The memory is in fact partitioned and each zone has a precise role. There are 5 large zones:

- The frame buffer: this is where the final image to be shown on the screen is stored. The frame buffer is also called the color buffer.

- The back buffer: this is the memory zone that stores the rendering of a scene while it is being created. Once the rendering of the scene is finished, the back buffer becomes the frame buffer and vice versa. This technique is known as double buffering and gives a stable, clean display. The back buffer is the same size as the frame buffer.

- The depth, stencil and accumulation buffers: the depth and stencil buffers are fundamental in the rendering of a 3D scene. The first, the depth buffer, is crucial because it is what lets 3D objects be displayed correctly, that is to say that distant objects are not drawn in front of those closest to the camera. The depth buffer is also called the Z-buffer. The stencil buffer is widely used for all sorts of graphics effects, the most fashionable at the moment being the famous shadow volumes. The accumulation buffer, for its part, is mainly used for realtime blur effects. The size of the depth buffer depends on the precision of the depth test, 16 or 32 bits. In the latter case, the size of the depth buffer is width * height * 4 bytes.

- The vertex buffer: a memory zone which appeared with the latest generations of 3D cards and which is designed to store the vertices of 3D objects (VBO or Vertex Buffer Object in OpenGL). Its size is variable.

- The texture buffer: this zone is the largest because it is where all the textures used by the 3D application are stored. When one knows that a single 2048x2048 texture in 24 bits occupies roughly 12 MB, one easily understands why the texture buffer is given the lion’s share of the video memory.
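To make the role of the depth buffer more concrete, here is a minimal Python sketch of the depth test: a pixel is only overwritten when the incoming fragment is closer to the camera than what is already stored. The function names, the nested-list buffers and the convention that a smaller depth value means closer are assumptions made for the example; real hardware performs this test per pixel in dedicated circuitry.

```python
def make_buffers(width, height, clear_color=(0, 0, 0)):
    """Create a color buffer and a depth buffer cleared to 'infinitely far'."""
    color_buf = [[clear_color] * width for _ in range(height)]
    depth_buf = [[float("inf")] * width for _ in range(height)]
    return color_buf, depth_buf

def draw_fragment(color_buf, depth_buf, x, y, depth, color):
    """Write the fragment only if it is closer than the one already stored."""
    if depth < depth_buf[y][x]:
        depth_buf[y][x] = depth
        color_buf[y][x] = color
        return True
    return False

color_buf, depth_buf = make_buffers(4, 4)
draw_fragment(color_buf, depth_buf, 1, 1, 10.0, (255, 0, 0))  # distant red object
draw_fragment(color_buf, depth_buf, 1, 1, 2.0, (0, 0, 255))   # closer blue object wins
draw_fragment(color_buf, depth_buf, 1, 1, 5.0, (0, 255, 0))   # behind the blue one: rejected
print(color_buf[1][1])  # (0, 0, 255)
```

Thanks to this test, objects can be drawn in any order and the closest one still ends up visible.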

fig. 18 - Organisation of the video memory

With this new information in mind, let us recalculate the memory occupation for a 3D application using 4 textures of 2048x2048 in 24 bits/pixel, with a display resolution of 1600x1200 in 24 bits/pixel, double buffering for the display and, of course, a 32-bit depth buffer:

mem_occupation = (2048*2048*3)*4 + (1600*1200*3)*2 + (1600*1200*4)

mem_occupation = 50331648 + 11520000 + 7680000

mem_occupation = 69531648 bytes, let us say roughly 70 MB!

Now we begin to understand the reason for the increase in the graphics card’s memory...
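This kind of budget is worth scripting if you want to try other resolutions or texture counts. The Python sketch below follows the same decomposition (textures, frame buffer plus back buffer, depth buffer); the function names and default parameters are assumptions for the example, and the vertex, stencil and accumulation buffers are left out, exactly as in the calculation above.

```python
def buffer_bytes(width, height, bytes_per_pixel):
    """Size in bytes of a width x height buffer."""
    return width * height * bytes_per_pixel

def video_memory_bytes(screen, textures, color_bpp=3, depth_bpp=4):
    """Estimate video memory use.

    screen is (width, height); textures is a list of
    (width, height, bytes_per_pixel) tuples.
    """
    width, height = screen
    color = 2 * buffer_bytes(width, height, color_bpp)   # frame buffer + back buffer
    depth = buffer_bytes(width, height, depth_bpp)       # 32-bit depth buffer
    texture_total = sum(buffer_bytes(w, h, bpp) for w, h, bpp in textures)
    return texture_total + color + depth

total = video_memory_bytes((1600, 1200), [(2048, 2048, 3)] * 4)
print(total, "bytes")
```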

fig. 19 - Private Museum Demo

The demo in figure 19, Private Museum, is an example of the use of the frame buffer, back buffer, vertex buffer, stencil buffer and texture buffer.

The graphics processor or GPU

We have kept the best for last: the GPU. The GPU has already been described in some detail at the beginning of this chapter. The functioning of a modern GPU can be visualised with the graphics pipeline model illustrated in figure 20:

fig. 20 – Modelling of a GPU

The GPU, which, as a reminder, stands for Graphics Processing Unit, is the graphics card’s principal component. The GPU is responsible for all the 3D calculations: transformation of the vertices (rotation, translation and scaling), calculation of the lighting, assembly of triangles, texturing and other effects such as fog, and rasterisation (conversion of the triangles into pixels in the frame buffer).

The notion of a triangle is relatively important because modern 3D cards are specially optimised for rendering triangles. The triangle is the basic geometric primitive (after the vertex and the line, of course). Any 3D shape can be modelled with triangles. Whatever method is used to model a 3D shape (polygons, NURBS, ...), the shape is finally converted into triangles by the 3D engine before being handed to the graphics card. Moreover, the triangle is the fastest primitive to render (drawing and filling): it is quicker to render 2 triangles than a quadrangle (a basic primitive with 4 sides) forming a square.
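As an illustration of this conversion, here is how a quadrangle described by four vertex indices might be split into two triangles. This is a minimal Python sketch: the function name and the choice of diagonal are assumptions, and a real 3D engine would also take care of winding order conventions and non-planar quads.

```python
def quad_to_triangles(quad):
    """Split a quad, given as 4 vertex indices in winding order, into 2 triangles."""
    a, b, c, d = quad
    # Both triangles share the diagonal a-c and keep the quad's winding order.
    return [(a, b, c), (a, c, d)]

square_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
print(quad_to_triangles((0, 1, 2, 3)))  # [(0, 1, 2), (0, 2, 3)]
```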

The functions of the GPU are numerous (vertex and pixel shaders, shader model, trilinear and anisotropic filtering, anti-aliasing, vertex buffers, cube and bump mapping, and others still). There is no need to list them all here, as that would go beyond the scope of this chapter. Each function of the GPU will be covered in detail in the other tutorials available on the site.

Each type of GPU has a code name which is worth knowing, because one comes across these names fairly often (in the technical characteristics of a card, specialised magazines, web sites, ...). I will only mention the code names of the two main manufacturers: nVidia and ATI.

In the case of nVidia, the code name of each GPU is composed of NV followed by the model number:

Update: February 23, 2006:

NV04 = nVidia RIVA TNT

NV05 = nVidia RIVA TNT2

NV10 = nVidia GeForce 256

NV11 = nVidia GeForce2 MX/MX 400 / Quadro2 MXR/EX

NV15 = nVidia GeForce2 GTS / GeForce2 Pro / Ultra / Quadro2 Pro

NV17 = nVidia GeForce4 MX 440 / Quadro4 550 XG

NV18 = nVidia GeForce4 MX 440 with AGP8X

NV20 = nVidia GeForce3

NV25 = nVidia GeForce4 Ti

NV28 = nVidia GeForce4 Ti 4800

NV30 = nVidia GeForce FX 5800 / Quadro FX 2000 / Quadro FX 1000

NV31 = nVidia GeForce FX 5600

NV34 = nVidia GeForce FX 5200

NV35 = nVidia GeForce FX 5900 / FX 5900 Ultra

NV36 = nVidia GeForce FX 5700 / FX 5700 Ultra / FX 5750 / Quadro FX 1100

NV38 = nVidia GeForce FX 5950 Ultra

NV40 = nVidia GeForce 6800 / 6800 GT / 6800 Ultra / Quadro FX 4000

NV41 = nVidia Quadro FX 1400

NV43 = nVidia GeForce 6200 / 6600 / 6600 GT / Quadro FX 540

G70 = nVidia GeForce 7800 GT / 7800 GTX / 7800 GS

G72 = nVidia GeForce 7300 GS

In the case of ATI, the principle is the same except that NV is replaced by R (or RV):

R100 = ATI Radeon (generic) or 7200

R200 = ATI Radeon 8500 / 9100 / 9100 Pro

RV200 = ATI Radeon 7500

R250 = ATI Radeon 9000 / 9000 Pro

RV280 = ATI Radeon 9200 / 9200 Pro / 9250

R300 = ATI Radeon 9500 / 9700 / 9700 Pro

R350 = ATI Radeon 9800 / 9800 SE / 9800 Pro

R360 = ATI Radeon 9800 XT

RV350 = ATI Radeon 9550 / 9600 Pro

RV360 = ATI Radeon 9600 XT

RV370 = ATI X300

RV380 = ATI X600 Pro /X600 XT

RV410 = ATI X700 Pro / X700 XT

R420 = ATI X800 / X800 Pro / X800 GT

R423 = ATI X800 XT / X800 XT PE

R480 = ATI X850 XT / X850 XT PE - PCI-Exp

R481 = ATI X850 XT / X850 XT PE - AGP

RV515 = ATI X1300 / X1300 Pro

RV530 = ATI X1600 / X1600 Pro

R520 = ATI X1800 XT / X1800 XL

R580 = ATI X1900 XT / X1900 XTX
