The latest Catalyst version is the 7.12 (the Cat7.12 internal number is 8.442.0.0). But exactly like the Cat7.11, these drivers have a bug in the management of dynamic lights in GLSL. But this time, I searhed for the source of bug because this bug is a little bit cumbersome in Demoniak3D demos. And we can’t use a previous version since Cat7.11+ are required to drive the radeon 3k (HD 3870/3850). Then I’ve coded a small Demoniak3D script that shows the bug. This script displays a mesh plane lit by a single dynamic light. The key SPACE allows to switch the GLSL shader: from the bug-ridden to the fixed and inversely.

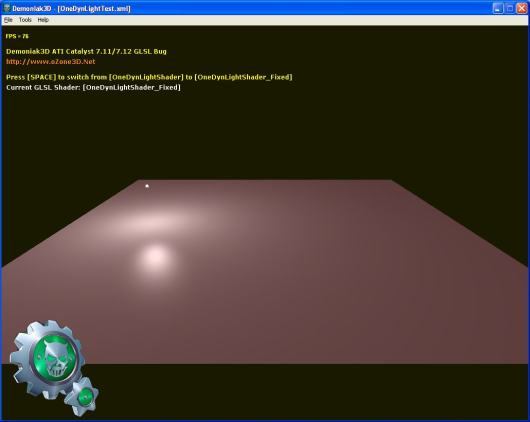

– The following image shows the plane enlightened with the fixed shader:

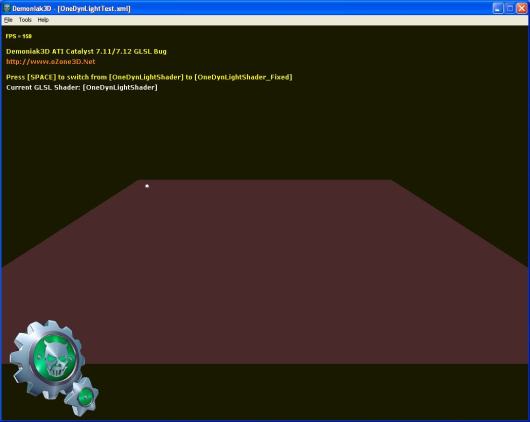

– The following image shows the plane lit with the bug-ridden shader:

Okay that’s cool, but where does the bug come from ? After a little time spent on shaders tweaking, my conclusion is that the bug is localized in the value of the built-in uniform variable gl_LightSource[0].position. In the vertex shader, this variable should contain the light position in camera space. It’s OpenGL that does this transformation, and we, poor developers, just need to specify somwhere in the C++ app the light position in world coordinates. In the vertex shader, gl_LightSource[0].position helps us to get the light direction used later in the pixel shader:

lightDir = gl_LightSource[0].position.xyz - vVertex;

With the Catalyst 7.11 and 7.12, the value stored in gl_LightSource[0].position is wrong. Then, one workaround, until the ATI driver team fix the bug, is to manually compute the light pos in camera space by passing to the vertex shader the camera view matrix and the light pos in world coord:

vec3 lightPosEye = vec3(mv * vec4(-150.0, 50.0, 0.0, 1.0)); lightDir = lightPosEye - vVertex;

mv is the 4×4 view matrix and vec4(-150.0, 50.0, 0.0, 1.0) is the hard coded light pos in world coord.

In the fixed pipeline, dynamic lights are well handled as shown in the next image:

In the Demoniak3D demo, the bug-ridden GLSL shader is called OneDynLightShader and the fixed one OneDynLightShader_Fixed. The demo source code is localized in the OneDynLightTest.xml file. To start the demo, unzip the archive in a directory and launch

DEMO_Catalyst_Bug.exe.

The demo is downloadable here: Demoniak3D Catalyst 7.11/7.12 Bug

This bug seems to affect all radeons BUT under Windows XP only. Seems as if ATI is forcing people to switch to Vista. Not cool… Or maybe ATI begins to implement OpenGL 3.0 in the Win XP drivers. Do not forget that with OpenGL 3.0 as with DX10, the fixed functions of the 3D pipeline like the management of dynamic lights will be removed.